Liability and AI: Product Liability Directive vs AI Liability Directive

The evolution of artificial intelligence (AI) in recent years has prompted significant legal developments within the European Union (EU). Two proposals, the revision of the Product Liability Directive and the proposed AI Liability Directive, represent the EU's effort to modernize liability laws and address the challenges posed by AI technologies.

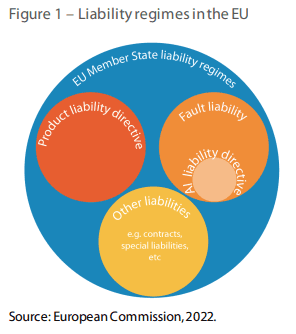

Although their names don't suggest it, the two liability regimes are separate and apply in different situations. The PLD update aims to modernise the existing EU no-fault (strict) product liability regime and applies to claims made by private individuals against the manufacturer for damage caused by defective products. In contrast, the AI Liability Directive proposal aims to reform national fault-based liability regimes and would apply to claims made by any natural or legal person against any person whose fault influenced the AI system that caused the damage.

Here's a deeper dive into the main aspects of the two future pieces of legislation:

The Product Liability Directive (PLD) and AI

The Product Liability Directive (PLD) provides people who have suffered material damage from a defective product with the legal basis to sue the relevant economic operators and seek compensation. The revised PLD includes several key changes which aim to reflect the growing influence of digital services and software in the modern economy. Some of these changes include:

Expanded Scope

The PLD now holds manufacturers accountable for defects in both tangible and intangible components. The revised directive broadens the definition of 'product' to include software (and software updates), whether embedded or standalone. This also includes AI systems. The updated definition also covers digital services where they are necessary for the functionality of the product with which they are interconnected or integrated (e.g. navigation services in autonomous cars). The new PLD also widens the range of economic operators liable for product defects by introducing a layered approach, in which liability depends on how the economic operator is classified. The new rules will therefore also cover AI system providers.

Defining Defectiveness

According to the updated PLD, a product is defective if it fails to ensure expected safety, considering its reasonable use, legal standards, and target user group's needs. Importantly, one of the elements considered when assessing defectiveness is the capacity of the product to continue to learn and acquire new features or knowledge. This element is meant to cover specifics related to AI and machine learning systems.

Damage Scope

Under the existing PLD, the producer is liable for defective products which have caused death, personal injury, or material damage. The updated PLD covers a wider range of damages, including psychological harm and the loss or corruption of data that is not used exclusively for professional purposes. Notably, the directive doesn't set a threshold for data loss to be considered a material loss.

Burden of Proof

Normally, claimants must establish that the product was defective and that the defect caused the damage. However, the Directive allows courts to shift the burden of proof relating to defectiveness and causality from the claimant to the economic operator. This is possible specifically in cases of excessive technical or scientific complexity, that is, in many potential cases against AI system providers. Courts may require the claimant to prove only that the product was likely defective or that its defectiveness is a likely cause of the damage.

Liability exemptions

Free, non-commercial open source software is exempt from the directive's liability rules. The rules do apply, however, when the software is supplied in exchange for a price, or in exchange for personal data used for anything other than improving the software’s security or compatibility. In addition, the Parliament pushed for an exemption for manufacturers of software components of a defective product, where those manufacturers were micro or small enterprises when they placed the software on the market. The exemption applies provided that another economic operator is liable.

The AI Liability Directive

In parallel with the PLD revision, the AI Liability Directive was proposed to specifically address damages caused by AI systems. Key aspects include:

Purpose and scope

The AI Liability Directive proposal complements the draft AI Act and uses the same defined concepts as that regulation (e.g., “high-risk AI systems”). It provides rules for a non-contractual, fault-based liability regime for damage caused by AI systems. It applies to AI systems that are available on, or operating within, the EU market. It also applies to all categories of AI systems, regardless of whether the economic operator commercialising the product is established in the EU.

Presumption of Causality

The proposal introduces a 'rebuttable presumption of causality' for claimants, easing the burden of proving damage caused by AI.

This makes it more feasible to bring a successful liability claim. Specifically, the proposal presumes a causal link between the defendant's fault (non-compliance with a duty of care under applicable law) and the output produced by the AI system, or the failure of the AI system to produce an output, that gave rise to the relevant damage.

For high-risk AI systems, claims involving non-compliance with specific AI Act requirements automatically assume this causal connection in court, unless the defendant can prove the claimant has enough evidence and expertise to establish the link independently. For AI systems not classified as high-risk, this presumption applies only when proving causality is excessively challenging for the claimant.

However, the proposed approach does not reverse the overall burden of proof from the claimant to the defendant, aiming to prevent stifling AI innovation due to increased liability risks. Instead, it offers a targeted relief in proving how an AI system’s specific output led to harm, acknowledging the complexity and opaque nature of AI technologies.

Disclosure of Evidence

The AI Liability Directive proposal empowers national courts to order the disclosure of evidence related to high-risk AI systems suspected of causing damage. This provision aims to help claimants access the evidence they need to identify liable parties.

Additionally, to ensure the efficacy of judicial processes, courts could order the preservation of evidence. Any disclosure or preservation order must be deemed necessary and proportionate to the claim, considering all parties' legitimate interests and safeguarding trade secrets and sensitive information. Should a defendant not comply with such orders, courts may assume the withheld evidence would demonstrate non-compliance with relevant duties, although defendants retain the right to contest this presumption.

Conclusion

The revised PLD proposal aims to modernise the existing EU no-fault-based (strict) product liability regime and would apply to claims made by private individuals against the manufacturer for damage caused by defective products. In contrast, the new AI Liability Directive proposes a targeted reform of national fault-based liability regimes and would apply to claims, made by any natural or legal person against any person, for fault influencing the AI system that caused the damage.

Next steps

On 14 December 2023, the EU Parliament and the Council reached a political agreement on the revised PLD. As the next step, it should be formally adopted by the Council and the Parliament, and then published in the Official Journal of the European Union. The revised PLD will then have to be transposed into national legislation within 24 months. The new rules will apply to products placed on the market 24 months after entry into force of the new PLD.

On the other hand, the AI Liability Directive still needs to be considered by the European Parliament and Council of the European Union. Once negotiated and adopted, EU Member States will be required to transpose the terms of the AI Liability Directive into national law, likely within two years.

Siyanna Lilova

Jan 17, 2024
